2025-08-30

My projects

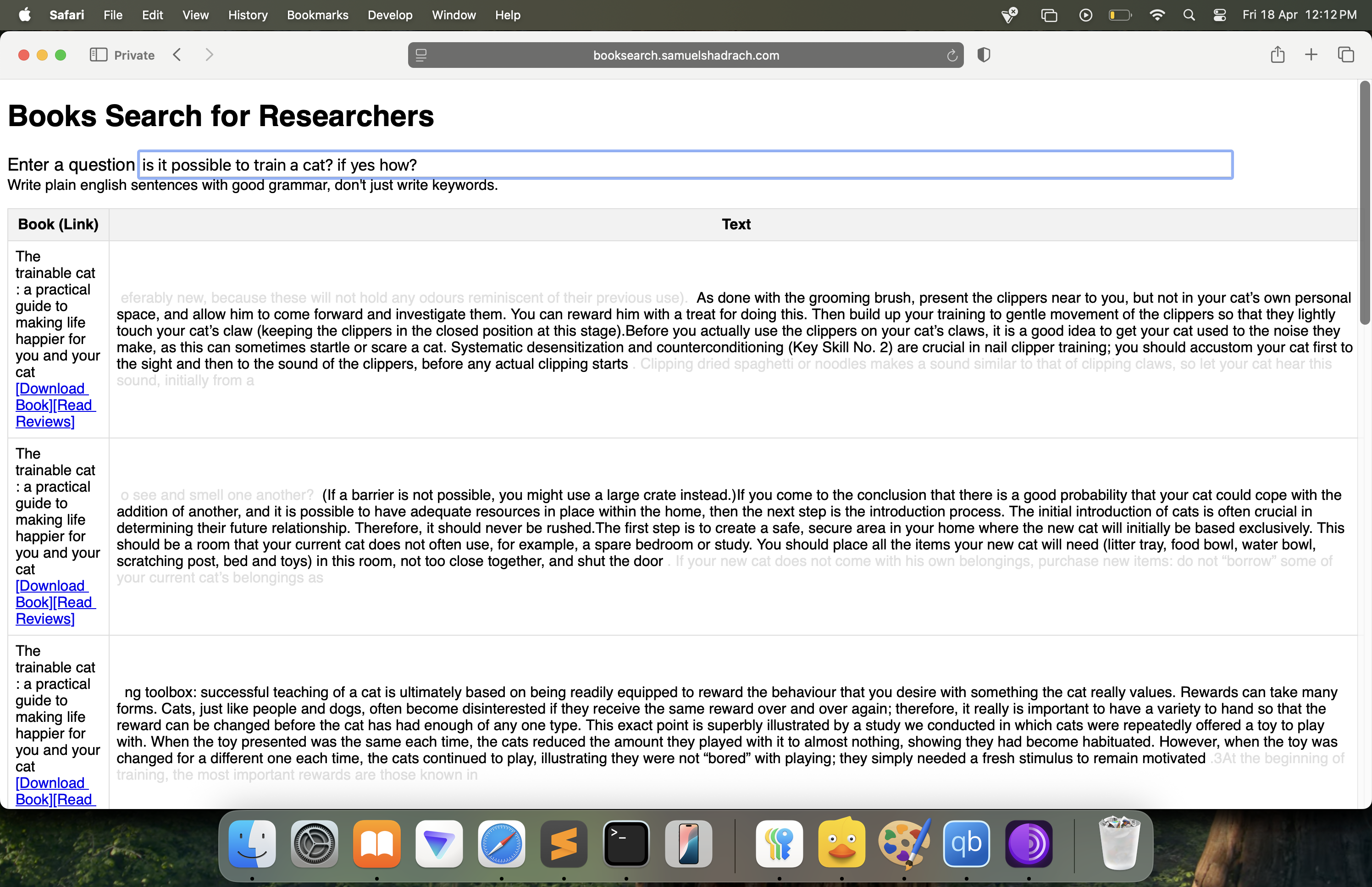

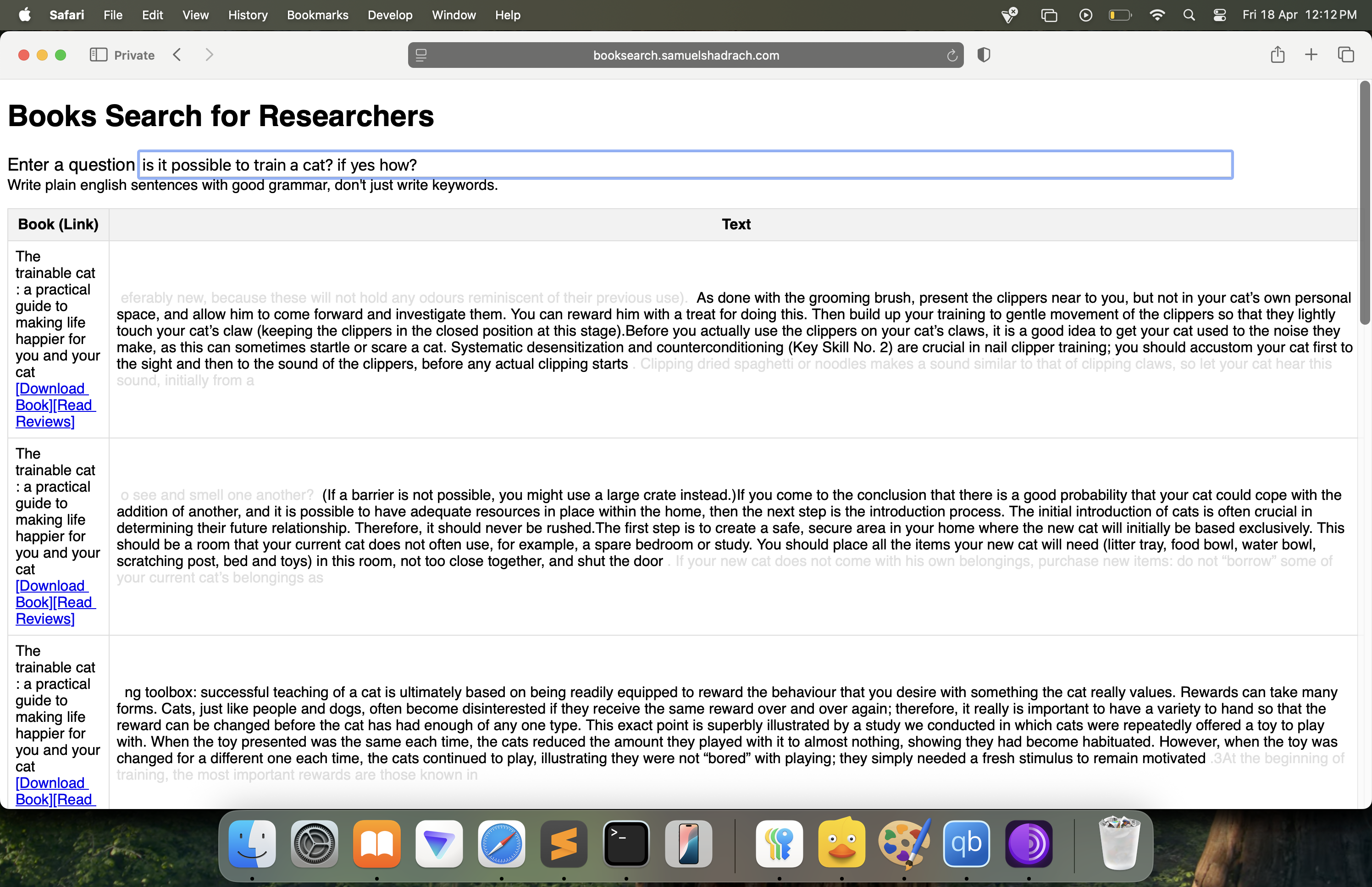

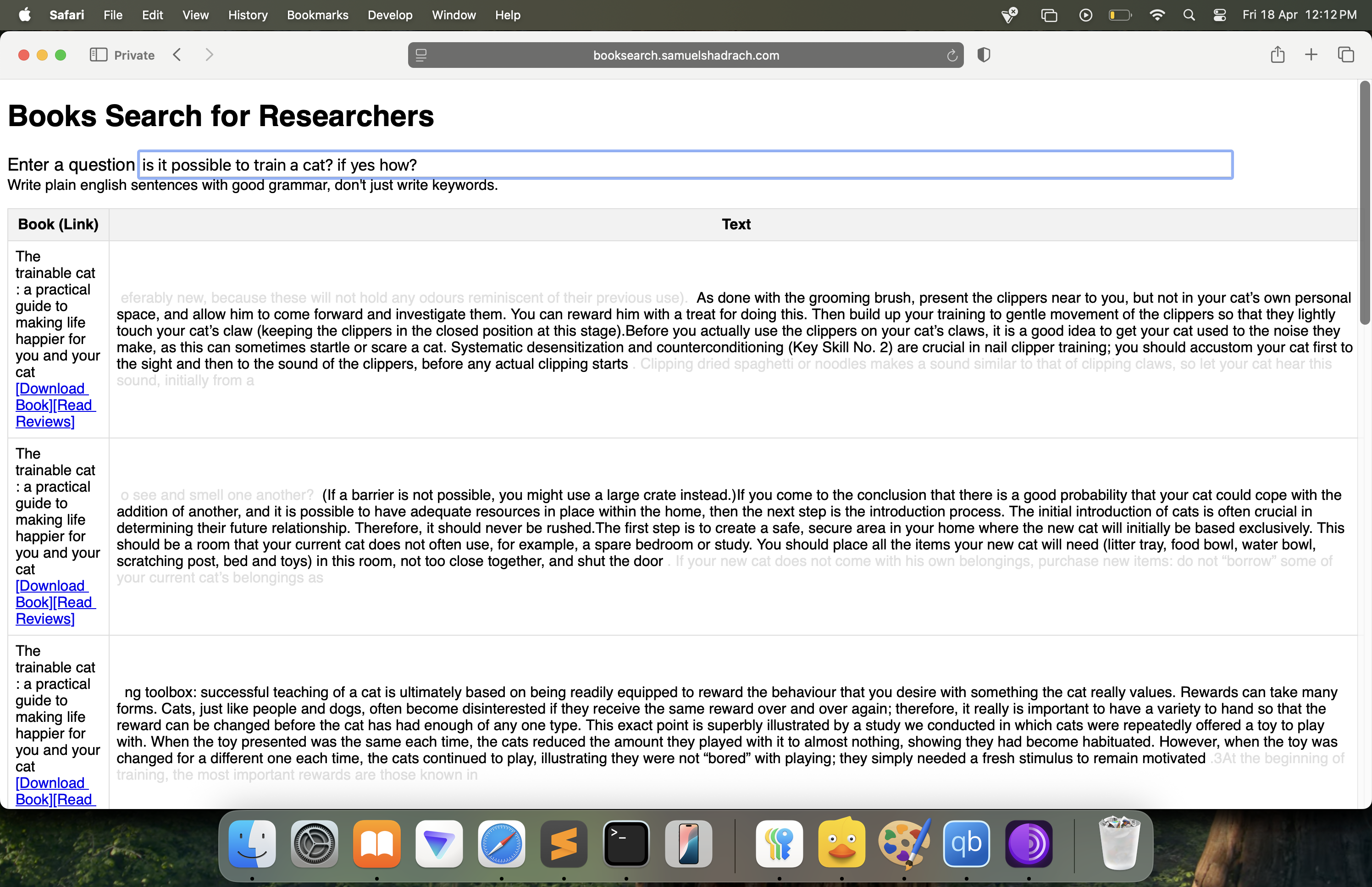

Search Engine for Books

About project

- Time duration of project: 2024-07 to 2025-01. Also implemented Qdrant 1-bit quantisation in 2025-08.

- GitHub: OpenAI Batch Queue

- Not hosting anymore due to hosting cost. If you can pay me $50/mo, I can host it. I can also offer a free trial for a few hours.

- Uses AI (openai text-embedding-3-small) to search a large collection of books (libgen English epubs). Intended for researchers.

- Contact me

More notes

- Target market

- Update (2025-06-05): HN post with 250+ upvotes says there's demand for this. Is there???

- I'm guessing this will be most useful for people who want to do intermediate-level research into existing work.

- Basic: If you've not already done 50 Google searches and quickly skimmed through the top 3 standard books (let's say reddit recommendations) on a topic, you might want to go do that first before using this search engine.

- Intermediate: If you've already done this much basic research and want more recommendations, then this search engine is for you.

- Advanced: Let's say you're a PhD researcher who has already spent multiple years on a topic, and you have already spent significant time designing a custom solution for filtering the latest papers. This search engine is likely not as useful for you. But it couldn't hurt to try.

- Budget

- Total spent out-of-pocket so far = ~$2600 = ~$1000 (openai embedding API) + ~$1600 (CPU, disk, bandwidth etc)

- Ongoing spend = $26/mo ($24/mo hetzner + $2/mo aws s3 DA; storing embeddings and snapshots, in case someone wants to host this in future)

- Developer notes

- Dataset = ~2 TB of embeddings (~300M vectors), from ~300 GB of plaintext, from ~7 TB (~700k) of unique English epubs, selected from the ~65 TB libgen database

- Embedding model = openai text-embedding-3-small

- Database and search algo = Qdrant (cheap, disk-based embedding search), DragonflyDB (expensive, RAM-based embedding search). Both tested. Qdrant is fast with 1-bit quantisation and slow otherwise due to disk speed; 1-bit quantisation still gives high search accuracy.

- Languages/Frameworks used = perl, bash, nginx, .... mojolicious, jq, htmlq, gnu parallel
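To illustrate why 1-bit quantisation helps a disk-bound search, here is a minimal Python sketch. It is not Qdrant's actual implementation. It assumes 1536-dimensional vectors (the default output size of text-embedding-3-small) stored as float32, which is an assumption about the storage format. The sketch keeps only the sign of each component, ranks by Hamming distance, then rescores a small shortlist with exact dot products. All function names and the `oversample` parameter are hypothetical.

```python
import random

DIM = 1536  # default output size of text-embedding-3-small


def quantize_1bit(vector):
    """Pack the sign of each component into one integer, 1 bit per dimension."""
    bits = 0
    for i, x in enumerate(vector):
        if x > 0:
            bits |= 1 << i
    return bits


def hamming(a, b):
    """Number of differing bits between two packed codes."""
    return bin(a ^ b).count("1")


def dot(u, v):
    """Exact dot product, used only for rescoring the shortlist."""
    return sum(x * y for x, y in zip(u, v))


def search(query, vectors, codes, top_k=3, oversample=4):
    """Rank by Hamming distance on 1-bit codes, then rescore a shortlist exactly."""
    q_code = quantize_1bit(query)
    by_hamming = sorted(range(len(codes)), key=lambda i: hamming(q_code, codes[i]))
    shortlist = by_hamming[: top_k * oversample]
    return sorted(shortlist, key=lambda i: -dot(query, vectors[i]))[:top_k]


def storage_gb(num_vectors, dim, bits_per_component):
    """Raw vector storage in GB, ignoring index and payload overhead."""
    return num_vectors * dim * bits_per_component / 8 / 1e9


# Small synthetic demo: random vectors, query is a noisy copy of vector 42.
rng = random.Random(0)
vectors = [[rng.gauss(0, 1) for _ in range(DIM)] for _ in range(100)]
codes = [quantize_1bit(v) for v in vectors]
query = [x + rng.gauss(0, 0.1) for x in vectors[42]]
print(search(query, vectors, codes))
print(storage_gb(300_000_000, DIM, 32), "GB as float32 (assumed format)")
print(storage_gb(300_000_000, DIM, 1), "GB as 1-bit codes")
```

Under these assumptions, 300M vectors take about 1.84 TB as float32, which is consistent with the ~2 TB figure above, but only about 58 GB as 1-bit codes. The coarse Hamming pass then touches far less data, and only the shortlist needs the full vectors.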

- More Developer notes

- Used bash and perl pipelines in all steps (extracting plaintext from epubs, converting to openai jsonl format, queueing them for openai servers, loading results into DB) to max out disk throughput

- Abandoned implementation in nodejs and python in order to avoid memory overflow and increase disk throughput.

- Had to figure out some tricks to ensure the entire codebase operates as a pipeline, not batch-wise. For instance, unzipping epubs in memory rather than on disk to avoid hitting disk I/O limits.

- OpenAI BatchAPI rate limit documentation is bad, so I had to figure out some hacks like sending 25 "requests" per batch file, 2048 strings per "request", 20 batch files at a time.

- This allowed me to process the queue in 2 weeks instead of 6 months.
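The batching numbers above (2048 strings per request, 25 requests per batch file) can be sketched in Python as follows. This is an illustration, not the project's code, which used bash and perl. The JSONL line shape (`custom_id`, `method`, `url`, `body`) follows the OpenAI Batch API input format; the `custom_id` naming scheme and the helper names are hypothetical.

```python
import json

MODEL = "text-embedding-3-small"
STRINGS_PER_REQUEST = 2048  # from the notes above
REQUESTS_PER_FILE = 25      # from the notes above


def chunks(items, size):
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def build_batch_files(strings):
    """Return a list of JSONL documents, one string per batch file."""
    requests = []
    for n, group in enumerate(chunks(strings, STRINGS_PER_REQUEST)):
        requests.append({
            "custom_id": f"req-{n}",  # hypothetical naming scheme
            "method": "POST",
            "url": "/v1/embeddings",
            "body": {"model": MODEL, "input": group},
        })
    return [
        "\n".join(json.dumps(r) for r in file_requests)
        for file_requests in chunks(requests, REQUESTS_PER_FILE)
    ]


# Demo with placeholder text chunks.
strings = [f"chunk {i}" for i in range(60000)]
files = build_batch_files(strings)
print(len(files), "batch files")
```

Each file then holds up to 25 × 2048 = 51,200 strings, so 20 files in flight cover about one million strings. Uploading the files, creating the batches, and polling for results are left out of this sketch.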

- Used OpenAI text-embedding-3-small

- Abandoned an open source model on rented vast.ai GPU due to bad search accuracy. Realised many embedding models are overfit to MTEB and perform poorly on real data.

- Hetzner + Qdrant with 1-bit quantisation worked out cheapest. Even Hetzner + DragonflyDB is far cheaper than most hosted embedding search solutions as of 2025-08.

- Used and then abandoned Pinecone due to cost.

- Used byte numbers (offsets) for indexing.

- Wrote and then abandoned my own custom CFI parser in javascript. Realised that since there's no reference CFI spec, every library does its own custom implementation that doesn't match spec. Hence the spec is not worth following.

- Research into better embedding search.

- Realised embedding search is better than finetuning, prompt injection or any other method as of 2025-01.

- As of 2025-05 I think the bottleneck to faster embedding search on > 100 GB plaintext is a cloud provider that hosts fast disks. Disks with > 8 GB/s sequential read speed are available for consumers but cloud providers are still stuck with 1 GB/s.

- Understood different state-of-the-art embedding search algos and implementations such as Microsoft DiskANN, Google ScaNN, FAISS. Implemented locality-sensitive hashing. Understood why in-memory databases are difficult to program. Understood why graph-based methods (like HNSW) outperform geometry-based methods (like LSH). More notes on this on my website or elsewhere.
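For readers unfamiliar with locality-sensitive hashing, here is a minimal Python sketch of one common variant, random-hyperplane LSH for cosine similarity. It is a generic illustration, not the implementation mentioned above, and every name in it is hypothetical.

```python
import random
from collections import defaultdict


def make_hyperplanes(num_bits, dim, seed=0):
    """Draw `num_bits` random hyperplane normals with Gaussian components."""
    rng = random.Random(seed)
    return [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_bits)]


def lsh_key(vector, hyperplanes):
    """One bit per hyperplane: which side of the plane the vector falls on."""
    return tuple(
        sum(h * x for h, x in zip(plane, vector)) >= 0 for plane in hyperplanes
    )


def build_index(vectors, hyperplanes):
    """Group vector ids into buckets keyed by their hash."""
    buckets = defaultdict(list)
    for i, v in enumerate(vectors):
        buckets[lsh_key(v, hyperplanes)].append(i)
    return buckets


def candidates(query, buckets, hyperplanes):
    """Ids that share the query's bucket; only these need exact comparison."""
    return buckets.get(lsh_key(query, hyperplanes), [])


# Demo on small random data.
rng = random.Random(1)
vectors = [[rng.gauss(0, 1) for _ in range(16)] for _ in range(100)]
planes = make_hyperplanes(8, 16)
index = build_index(vectors, planes)
print(candidates(vectors[3], index, planes))
```

With a single hash table, a true neighbour that sits just across one hyperplane lands in a different bucket and is missed, so practical setups usually combine several tables or probe nearby buckets. That extra work is one plausible reason graph-based indexes tend to reach higher recall for the same cost.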

Real-time voice translation

Time duration of project: 1-3 days

Objective:

- Translate Alice's voice for Bob to hear in Bob's language. Translate Bob's voice for Alice to hear in Alice's language.

- Neither person should hear translation of their own voice.

- Alice and Bob could be in the same room physically or in different rooms.

- Neither person should hear noise due to closed loop between a mic and speaker.

Zero-code solution

- Open: Realtime API in the OpenAI playground in macOS Safari. Input: macOS mic

- Open: Zoom. Input: Loopback Audio. Output: macOS speaker

- Open: Rogue Amoeba Loopback.app. Create new device. Safari 1&2 -> Channels 1&2

Do this on only one device for translation one way. Do this on both devices for translation both ways.

Once you have this setup working, you can also connect headphones for better noise cancellation if both people are in the same room. The only change required is Zoom Output: Headphone

Prepend each prompt with "translate to French/Chinese/etc" either by speaking these 3 words aloud, or by writing an app that can do it automatically. (I can host this if there's demand.)

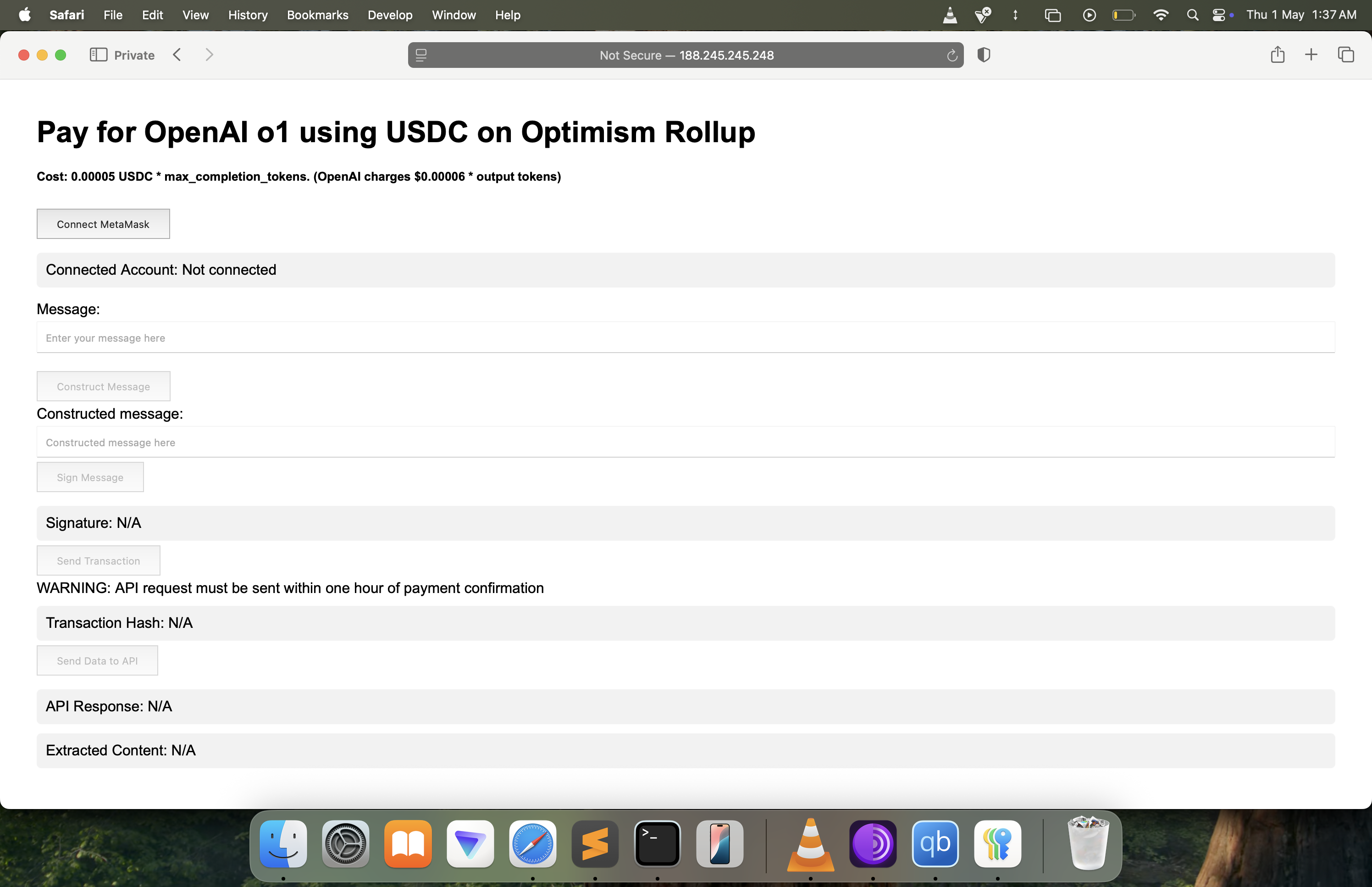

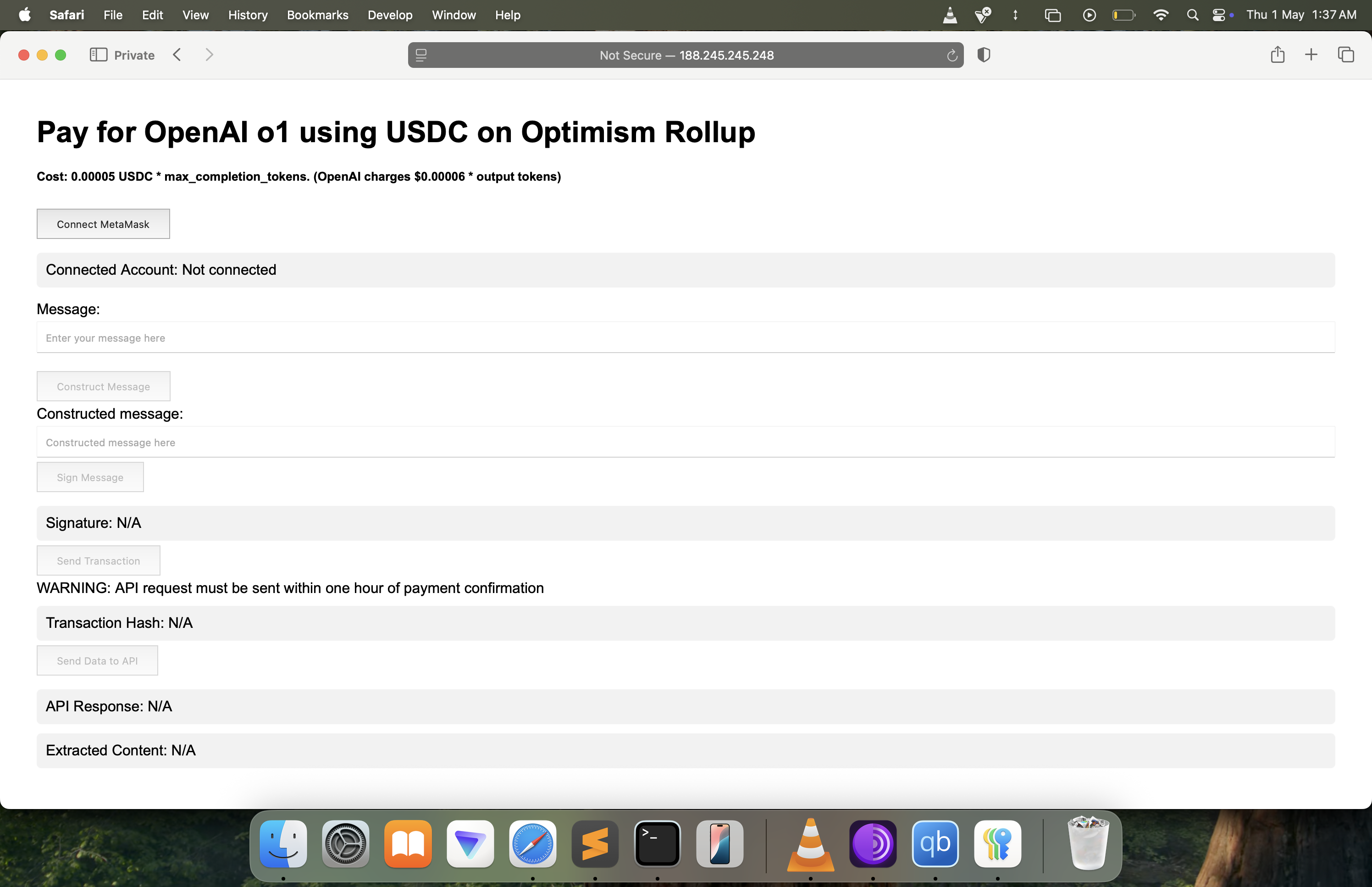

Tokens for tokens

Time duration of project: 1-3 days

- Not hosting anymore due to lack of user demand

- OpenRouter offers the same feature now

- Pay for OpenAI API using cryptocurrency, at discounted rate, anonymously

- AI model: openai o1; Payment provider: Optimism Rollup (lower tx fees than Ethereum mainnet); Currency supported: USDC

Screenshots
