connect_with_me/donate_to_me_ai_whistleblowers.html

Minor update

- Made minor edit on 2025-10-16

- Also as of 2025-10-16, I now think project 2 (improving opsec of journalists) is not as valuable as project 1 (improving opsec of whistleblowers)

- The AI race could lead to a hot war between US and China. If there is no hot war however, it seems likely US mainstream media houses will be willing to publish classified documents pertaining to AI risk.

- US mainstream media houses have made lapses in opsec before. However, if the whistleblower has done their job correctly in redacting information before sending it to a journalist, this should not matter as much.

- I am still excited about media houses reporting on US issues while maintaining personnel and offices outside the US geopolitical sphere of influence. Outside the sphere probably includes India, China, Russia and Pakistan unless AI takeoff also topples the current nuclear weapon-based world order.

- However I am less optimistic on impact of project 2 in the exact way it has been phrased below, than I was a few months back.

2025-06-03

Funding request - enable whistleblowers at AI companies

Disclaimer

- Quick note

- Might update document as I get more info

- Contains politically sensitive info

- I wrote this document mainly as reference to make funding applications to a number of institutional funders in the AI x-risk space.

Social proof, comments and feedback received

[REDACTED]

All details not published for privacy reasons. Message me for more details.

Project description

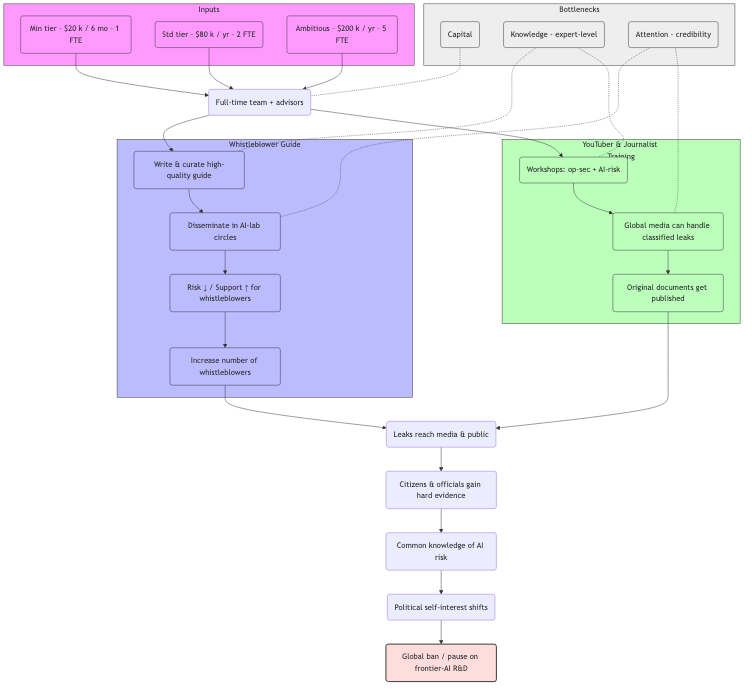

Project 1

- Publish a guide for whistleblowers in AI companies leaking classified information.

- Guide will include all knowledge that is relevant to a would-be whistleblower including technical knowledge on opsec, legal knowledge, geopolitical knowledge, knowledge on dealing with journalists and mental health resources.

- Assume that AI companies will have classified some of this information. Assume that best strategy for such a whistleblower is breaking US law and escaping to a country like Russia, not attempting a legal defence within US.

- Example: Edward Snowden

Project 2

- Provide youtubers and journalists with technical education on a) opsec for handling leaked documents including classified info b) AI risk

- I am primarily focussed on onboarding at least 2-3 popular youtubers and journalists located in each US-allied nuclear state (US, UK, France) and each US-non-allied nuclear state (Russia, China, India, Pakistan, Israel).

Work done on this project so far

Project 1

Incomplete drafts: database, guide

This is like v0.1, expect final version to be based a lot more on historical data and consensus of expert opinions, and less on my personal opinions.

Project 2

Not yet started. Just made lists of journalists and youtubers I'd like to contact in ideal world.

Funding requested

Minimum: $20k for 6 months for 1 full-time founder (me)

- Shift to SF

- Main

- Helps with personal motivation

- Build credibility for my public brand

- Obtain in-person networking opportunities

- Get expert feedback on whistleblower guide from following sets of people. Feedback is easier to obtain in-person if I'm physically present in the US.

- People in AI risk community. (Very US-centric.)

- People working in AI labs, including potential whistleblowers. (Very US-centric.)

- Journalists covering AI labs. (US-centric but not exclusively US.)

- Cybersecurity experts. (US-centric but not exclusively US.)

- Legal experts in US law and international law. (Very US-centric.)

- Past whistleblowers of other organisations in the US. (US-centric but not exclusively US.)

- Provide opsec education and AI risk education to journalists and youtubers

- Sales pitches generally work best in-person.

- Capital raise increases brand value, which makes sales pitch more effective.

Standard: $80k for 1 year for 2 full-time cofounders (me and a cofounder)

- Same as above

- Also: will search for a full-time cofounder

- Will help with personal motivation

- Will help with filling in knowledge gaps, such as lack of legal knowledge

Ambitious: $200k for 1 year for 5 full-time team members

- Same as above

- Also: Will demarcate roles more clearly. My current guess: 2 people will only work full-time on the whistleblower guide. 3 people will only work full-time on networking and sales pitches to journalists.

- In-person networking is hard to scale and can benefit from multiple people putting in full-time work.

- In particular, wish to contact youtubers and journalists with a sales pitch on improving their opsec and AI risk knowledge. This can require extensive in-person effort.

Bottlenecks

Bottlenecks for project 1

- Capital

- See above for how capital will accelerate and improve quality of this project.

- Not blocked by lack of capital. It is possible to complete first draft of this project without capital.

- Attention

- If project builds enough credibility, can get expert advice from cybersecurity experts, journalists, lawyers, political strategists and others who might provide the advice voluntarily for altruistic reasons.

- Knowledge

- Requires expert knowledge in a variety of areas.

- I have above-average knowledge on:

- cybersecurity and opsec

- I can review multiple approaches taken by different projects and their tradeoffs

- historical whistleblower cases

- I have below-average (but greater than zero) knowledge on:

- US law, international law

- whistleblower guide needs in-depth legal knowledge

- geopolitics

- psychology

- whistleblower may need mental health resources provided by the guide, as it is too risky for them to hire a psychiatrist.

- newswriting, media training

- whistleblower may need media training to persuasively argue their case in front of the public. This can then be posted to youtube or similar platform.

Bottlenecks for project 2

- Capital

- See above for how capital will help.

- Not blocked by lack of capital. It is possible to complete first draft of this project without capital.

- Attention

- If project builds enough credibility, can work directly with existing youtubers and journalists. Not mandatory to acquire public attention from scratch.

- It is typically in a youtuber's or journalist's self-interest to publish documents before the others do it.

- However, for publishing classified information, they may face significant risks to their career and reputation. (See case studies for more.)

- Knowledge

- I have above-average technical knowledge on:

- cybersecurity and opsec.

- For instance, teaching youtubers to operate a SecureDrop server or similar setup. I have extensively gone through their docs so I know I'll be able to install it.

- AI risk

- I have below-average technical knowledge on:

- US and international law.

- Youtubers and journalists might want to understand their legal exposure, for soliciting, processing or publishing leaked information. It's possible their existing legal team is poorly equipped for this.

Knowledge bottleneck for both projects

- The biggest bottleneck by far is that I currently lack legal knowledge.

- Will need to onboard someone with legal knowledge, either as an advisor, or full-time.

- I want as much of the whistleblower guide as possible to be backed by either historical evidence or a consensus of experts.

- My personal intuition should only be relevant in sections where neither historical evidence nor a consensus of experts exists.

- If multiple experts disagree on the best advice, it may even be possible to post both pieces of advice side by side.

- I'm currently undecided on if this is a good idea, but it is an option available to me.

- Even if I don't publish view of people I disagree with, they are free to publish their views on their own.

Team

Currently just me (Samuel Shadrach).

My bio

- Indian citizen, born and resident in India.

- Made research notes including those that convinced me to work on this project. 2025

- Wrote AI-based search engine for books. 2024-25

- Attempted building EA IIT Delhi community. 2024.

- Completed IIT Delhi, BTech and MTech, biochemical engineering and biotechnology. (Considered among top 2 engineering colleges in India.) 2018-2023

- Completed ML Safety Scholars (40h/week, 9 weeks) under Dan Hendrycks, then at UC Berkeley. 2022

- Worked at Rari Capital, managed risk framework for $1 billion AUM in cryptocurrency lending/borrowing. 2021-22

- Worked at Market.xyz, helping with founding and risk framework for cryptocurrency lending/borrowing. 2021-22

Previous track record of team (detailed)

- Research on surveillance and whistleblowers - Partially successful, most relevant to this funding application

- All links published here

- Wrote detailed review and criticism for SecureDrop, an existing solution in this space used by many of the top corporate media outlets in the US including the Guardian, New York Times, etc

- Wrote about potential improvement to ecosystem for whistleblowing

- Wrote about long-term societal implications of lots of information coming out in public

- Wrote about mitigating downsides such as weapons capabilities becoming open source

- I consider this ongoing research project, not completed.

- Wrote AI-based search engine for books. - Successful

- More notes on this on my website

- Still think this app is valuable for a niche of researchers. Haven't prioritised user acquisition due to shifted life priorities.

- Learnings

- Iterated through 5-10 different failed approaches before I got the current approach working. Improved as a software developer as a result.

- Obtained very fine-grained view of AI capabilities in 2025, including the capability differences between finetuning, RL and embedding search. As of 2025, embedding search is still by far the most economically useful. Main reason is error rate is lowest when end user can directly refer to ground-truth data.

- Full-time travelling, including teaching English at a school in Vietnam for 1.5 months - Successful

- Learnings

- Accomplished personal goals. (Not elaborating here as this is a professional document.)

- Completed ML Safety Scholars under Dan Hendrycks, UC Berkeley - Successful

- Spent 40 hours / week, 9 weeks studying deep learning and safety under Dan Hendrycks

- Multiple PyTorch assignments training our own models, and multiple video lectures that I found very high-quality.

- Learnings

- Better technical understanding of deep learning also enabled me to form more accurate views on AI timelines and AI x-risk.

- I have now published my latest views on AI timelines and intelligence explosion on my website, including rebuttals to Ajeya Cotra's evolutionary anchors and Yudkowsky's certainty on intelligence explosion. Links: AI timelines, Intelligence explosion

- Started EA IIT Delhi community, with CEA UGAP funding - Not very successful

- Influential in ensuring ~10 people from my college attended EAGx Jaipur. Zero full-time employees of EA orgs recruited.

- Ran weekly meetups to complete intro to EA course.

- Multiple members of EA IIT Delhi are now aiming to work on AI SaaS startups. Other members are currently doing higher studies in AI-related fields in UK and US respectively.

- Learnings:

- I significantly underestimated the extent to which people I'm talking to are facing pressure to pick standard career paths, including financial pressure and social pressure from family.

- If I could go back and do it again I would filter hard on these criteria from day one, and prefer finding 1 person who is both passionate and financially privileged, over 10 people with lukewarm interest.

- Even people who work full-time on accelerating AI and think AI risk is a real problem might not switch career paths unless they've done the psychological work to become resistant to this social pressure.

- Managed risk framework and operations for Rari Capital and Market.xyz - Partially successful

- Designed risk framework for managing $1B in cryptocurrency at peak

- Worked on risk framework. No major loss of funds due to lending/borrowing risk, indicating my risk framework did not fail. (There was loss of funds due to smart contract hack, but that was out of scope of my work.)

- Operations work. Helped scale team from 6 to 15 team members via outreach and vetting of applicants, including via short-term projects.

- Cracked JEE Mains and Advanced to study at IIT Delhi - Successful

- Standard competitive exam for most STEM degrees in India

- Obtained All India Rank of ~4000 out of over 1 million annual applicants.

- This required multiple years of full-time coaching and preparation.

Location

If funded, all team members will be located in the US for the next 1 year.

[REDACTED]

All details not published for strategic reasons. Message me for more details.

Looking for cofounder

- Alignment

- High level of commitment is the most important criterion. Cofounder should believe in the project enough that they find a way to complete it even if our cofounder relationship breaks apart and current funder pulls back funding, for example.

- Knowledge

- I prefer someone with background in either international law or journalism, and someone who is not completely against tech (being neutral or positive is OK). This is not mandatory.

- Citizenship

- [REDACTED] Message me for more details.

Existing projects and weaknesses

- EA projects

- Most EA funding currently goes to:

- technical AI research

- I am supportive of technical AI alignment research but bearish on most of it working out given the timeframe of 5 years.

- I am more optimistic on AI ban and international coordination, than I am on most alignment research agendas.

- Out of the alignment research agendas I'm most optimistic on AI boxing. This too will only work until some level of superintelligence, and eventually international coordination to pause or ban research is needed.

- supporting AI policymakers

- By 2026-27, AI will likely become a top item of national security interest of both US and China.

- Convincing US policymakers is less helpful, if the policymakers are attempting to act against the perceived self-interest of both the US Congress and Senate members, and the perceived self-interest of leaders of US intelligence community.

- 99% of humanity is still broadly unaware of the AI risk arguments as presented by Yudkowsky and others, despite billions in funding for AI risk. Movements that aim for mass public support are able to get more awareness for less money spent IMO.

- Existing whistleblower guides

- My generic view on most existing whistleblower guides

- Generally supportive

- Mostly written by citizens of US resident in US. This has obvious chilling effect on what they can publish in public.

- Some of these guides have a lot of useful information. I have read all the above guides in detail.

- Most of their guides are useful for a whistleblower who does not leak classified information, and stays within the US for a legal defence.

- They have a lot less public information on whistleblowers who escape the country (such as Snowden or Assange) or leak classified information. Such whistleblowers need legal expertise on international law, making asylum requests, and geopolitics. I have not found a public guide with this information yet.

- Existing tech projects

- Side note: Less than 100 devs seem to be managing the codebases for all the below listed projects. This all by itself seems like an attack vector to me. I have not verified exact figures.

- Tails (owned by Tor project)

- Highly supportive

- It acts as a foundation for this project

- Tor browser

- Highly supportive

- It acts as a foundation for this project

- SecureDrop (uses pgp and Tor under-the-hood)

- Highly supportive

- See my document on this, for a detailed review and criticism of SecureDrop

- Biggest weakness is that it is only used by journalists inside of the US geopolitical sphere of influence (North America, Europe, Australia, etc). Not used by journalists in India, China, Russia, Israel for example.

- Second biggest weakness is that it does very little to improve opsec of whistleblower, and primarily improves opsec of journalist. IMO this is not great, as it does not earn trust of whistleblower who is more likely to go to prison. (Multiple previous case studies including Reality Winner, where journalists have been careless about whistleblower privacy.)

- Signal

- Highly supportive

- For this project, I think Signal is not optimal, at least as it works today.

- SecureDrop or similar approach may still be best for this project. Journalist and youtubers can run their own onion servers without involving any third party server.

- Posting messages to Signal's servers is slightly better than posting encrypted messages on some random third party server, like how say, dark web vendors do currently. Signal takes some measures such as sealed sender (which I like) and secure enclave (which I don't like) to reduce metadata they collect on their server.

- Signal doesn't run well on Tails, as some of its features are mobile-native. For instance, it only supports phone number registration, with no anonymous method such as cryptocurrency payment or PoW hashes. Waiting for this to get fixed.

- I might write a detailed review of Signal sometime.

- Protonmail

- Highly supportive

- For this project, Protonmail does not offer significant additional security features compared to just using Gmail. Can assume both maintain logs and will cooperate with law enforcement. The main feature I like is that Protonmail offers anonymous registration using cryptocurrency.

- Bare minimum opsec: Protonmail + PGP + Tails

- Bare minimum that any journalist or youtuber should be offering IMO, even if they don't have full-time effort to devote to running servers or studying cybersecurity.

- Lack of technical knowledge is the only reason I can see why journalists and youtubers don't offer this.

- Existing journalist projects

- The Intercept

- I take a neutral, not positive, view of their work today

- Was founded with help of Laura Poitras and Glenn Greenwald, who worked with the Edward Snowden leaks

- Both of them ended up resigning due to lapses of opsec on the part of the Intercept editors, which ended with Reality Winner being imprisoned

- Both journalists are now working independently.

- Glenn Greenwald runs an independent news channel on Rumble.

- Independent SecureDrop servers

- Some journalists such as Stefania Maurizi and Kenneth Rosen run SecureDrop servers independent of any corporate-funded media org.

- Stefania Maurizi has worked with both Assange and Snowden previously.

- Wikileaks

- Highly supportive

- Assange was recently released after several years in a UK prison, which followed several years in the Ecuadorian embassy in London.

- His latest statement after being released does not indicate any plans to continue operating wikileaks.

- Likely has extensive expertise relevant to this project, but may not be able to publicly provide it.

- Existing whistleblowers

- With enough branding, it may be possible to contact existing govt whistleblowers for their expertise.

- Edward Snowden

- Likely has extensive expertise relevant to this project, but may not be able to provide it. Putin's terms for asylum explicitly included not carrying out any further leaks.

- See list of case studies for more.

Theory of change

Theory of change for project 1

- We publish a high-quality guide supporting whistleblowers

- We make the guide popular in relevant circles

- => a) Whistleblowing without going to prison becomes less risky, and b) whistleblowers feel they have moral support from our team, and c) whistleblower becomes better calibrated on success likelihood of this plan, which is above 50% IMO.

- => Increased probability that a whistleblower comes forward and leaks information.

- => Leaked information gets published.

- => Citizens in multiple countries are convinced AI risk is a top priority issue. More people within government of US and China are convinced AI risk is a top priority issue. They get an accurate picture of the situation as it plays out in real time.

- => "Common knowledge" is established. It becomes more in the self-interest of anyone in US Congress/Senate to take steps on the issue.

- => Complete global ban on AI research.

Theory of change for project 2

- We allow more youtubers to safely handle classified information

- Case 1: No hot war between US and China or Russia

- Existing journalists in US may already be ready to publish the information. For instance those hosting SecureDrop servers.

- However they are likely to publish a political piece that aligns with the media outlet's perceived self-interest, and they are less likely to publish the original documents.

- => We will ensure original documents are published, by putting US corporate media in competition with youtubers inside and outside US

- => If original documents get published, citizens in US and China get a more accurate picture of the situation as it plays out in real time.

- => Similar theory of change here onwards as project 1

- Case 2: Hot war between US and China or Russia. All US journalists are gagged from publishing leaked US classified information

- Only journalists outside US geopolitical sphere of influence may be safely able to publish the information

- => Our project may be critical in ensuring the information is published at all.

- => Similar theory of change here onwards as project 1

Whistleblowers are disproportionately important for changing everyone's minds (collective epistemology)

- I generally think the average human being is bad (relative to me) at reasoning about hypothetical futures, but not that bad (relative to me) at reasoning about something with clear empirical data.

- Imagine two hypothetical worlds. Which one leads to more people being convinced of the truth?

- One world in which lots of people speculated about NSA backdoors in fiber optic cables, operating systems and so on, but no actual backdoors were found and there was no real empirical data for them.

- And another world in which nobody speculated about it, but a whistleblower like Snowden found clear empirical evidence for it.

- I also think if you're inside an org that has a lot of collective attention, you will also get a lot of collective attention.

- Case study: As of 2025-01, Geoffrey Hinton has been covered by a lot of mainstream news channels. For most people on Earth who are now introduced to AI risk as a serious topic, I'm guessing this is their first introduction.

- Case study: As of 2025-05, Daniel Kokotajlo's predictions have been read by people inside US executive branch

- Most AI risk advocates have probably not acquired the same amount of attention as either of these two, despite spending a lot more of their energy and attention in an attempt to acquire it.

- I think one single whistleblower providing clear empirical evidence of incoming AI risk might end up convincing more people than hundreds of people making speculative arguments.

Red-teaming theory of change

Here is a giant list of reasons why people might not want to fund this.

(You can add a comment to upvote any reason or add a new one. I will add more arguments and evidence for whichever points get upvoted by more people.)

- US intelligence circles will significantly underestimate national security implications of AI, lots of information about AI companies will not become classified - Disagree

- I think AI will likely be the number one national security issue of the US by 2026 or 2027 and lots of important information will get classified soon after.

- I'm putting more evidence here because this argument got upvoted.

- Attention of govt and intelligence circles

- Paul Nakasone

- ex-Director of NSA, now on OpenAI board

- Recent talk by Paul Nakasone

- Says: Cybersecurity, AI, and protecting US intellectual property (including AI model weights) are the primary focus for the NSA.

- Likely a significant reason why he was hired by Sam Altman.

- Timothy Haugh

- ex-Director of NSA, fired by Trump in 2025

- Recent talk by Timothy Haugh (12:00 onwards)

- Says: Cybersecurity and AI are top challenges of US govt.

- Says: AI-enabled cyberwarfare such as automated penetration testing now used by NSA.

- Says: Over 7000 NSA analysts are now using LLMs in their toolkit.

- William Burns

- ex-Director of CIA

- Recent talk by William Burns (22:00 onwards)

- Says: Ability of CIA to adapt to emerging technologies including large language models is the number one criterion of success of CIA.

- Says: Analysts use LLMs to process large volumes of data, process biometric data and city-level surveillance data.

- Says: Aware of ASI risk as a theoretical possibility.

- Says: CIA uses social media to identify and recruit potential Russian agents.

- Avril Haines

- ex-Director of National Intelligence, ex-Deputy Director of CIA, ex-Deputy National Security Advisor

- Recent talk by Avril Haines

- Says: major priority for her is US election interference using generative AI in social media by Russia and China

- US policy efforts on GPU export controls against China

- Significant US policy efforts already in place to sanction GPU exports to other countries.

- China is currently bypassing export controls, which will lead US intelligence circles to devise measures to tighten export controls.

- Export controls are a standard lever that US policymaking and intelligence circles pull on many technologies, not just AI. This ensures the US remains at the frontier of R&D in most science, technology and engineering.

- Attention of Big Tech companies

- Leaders of Big Tech companies, including Jensen Huang, Satya Nadella, Larry Ellison, Reid Hoffman, Mark Zuckerberg, Elon Musk and Bill Gates have made public statements that their major focus is AI competitiveness.

- Elon Musk

- Elon Musk is explicitly interested in influencing US govt policy on tech.

- As of 2025-05, Elon Musk likely owns the world's largest GPU datacenter.

- Has publicly spoken about AI risk on multiple podcasts

- Mark Zuckerberg

- As of 2025-05, open sources latest AI models

- Has previously interacted with multiple members of Senate and Congress

- Has publicly spoken about AI risk on multiple podcasts

- People who understand nothing about AI will follow lagging indicators like capital and attention. This includes people within US govt.

- Capital inflow to AI industry and AI risk

- OpenAI, Deepmind and Anthropic have posted total annual revenue of $10B in 2024. Assuming revenue multiples of 10x to 100x, this implies a total market cap between $100B and $1T as of 2024. For reference, the combined market cap of Apple, Google, Microsoft and Facebook is $10T as of 2025-05.

- All Big Tech companies have experience handling US classified information. Amazon and Microsoft manage significant fraction of US government datacentres.

- If you believe AI in 2027 will be significantly better than AI in 2024, you can make corresponding estimates of revenue and market cap.

- Attention inflow to AI industry

- OpenAI claims 400 million weekly active users. This is 5% of world population. For reference, estimated 67% of world population has ever used the internet.

- As of 2025-05, Geoffrey Hinton speaking about AI risk has been covered by mainstream news channels across the world, which has significantly increased the fraction of humanity that is aware of AI risk. (You can test this hypothesis by speaking to strangers outside of your friends-of-friends bubble.)

- AI capability increases will outpace ability of US intelligence circles to adapt. Lots of information won't become classified. - Weakly disagree

- I have low (but not zero) probability we get ASI by 2027. If we get ASI by 2030, I think there's enough time for them to adapt.

- Classifying information is possible without significant changes in org structure or operational practices of AI labs. This means it can be done very quickly.

- Classification is a legal tool.

- The actual operational practices to defend information can take multiple years to implement, but this can come after information is already marked classified in terms of legality.

- US govt can retroactively classify information after it has already been leaked.

- This allows the US govt to pursue a legal case against the whistleblower under Espionage Act and prevent them from presenting evidence in court because it is now classified information.

- The specific detail of whether information was classified at the time of leaking is less important than whether it poses a national security threat as deemed by US intelligence circles. (Law does not matter when it collides with incentives, basically.)

- Case studies

- Mark Klein's 2006 leak of AT&T wiretapping - retroactively classified

- Hillary Clinton 2016 email leak - retroactively classified

- Abu Ghraib abuse photographs 2004 - retroactively classified

- Sgt. Bowe Bergdahl 15-6 investigation file 2016 - retroactively classified

- Opsec requirements to protect yourself from the US govt are very hard to meet, and my current level of technical competence is not enough to add value here. - Disagree

- The whistleblower guide I'm writing explicitly states that the strategy is leaving the US and publishing, not staying anonymous indefinitely. It is assumed the NSA or AI lab internal security will doxx you eventually.

- A lot of people with cybersecurity background are IMO too focussed on ensuring anonymity indefinitely, which I agree is very hard. My strategy requires as much preparation of legal and media training aspects as it does cybersecurity, because it is not a strategy that relies on indefinite anonymity.

- IMO there's at least 50% chance a top software developer at an AI company can pull this off even without a guide.

- Part of the value of the guide is simply reminding developers that succeeding at this plan is hard but not impossibly hard, and that with some preparation there's a high probability they can pull this off.

- Increasing probability of success from say 50% to 70% makes this guide worth publishing for me. There's a strong case IMO for why this success probability increase directly correlates with reduction in AI x-risk probability.

- One whistleblower's publication of empirical real world data could be worth more for collective epistemology than hundreds of high-status people making speculative arguments.

- Should support whistleblowers coming out publicly in the US, instead of going to another country to release the documents - Disagree

- I agree that for people who don't leak classified information, staying in the US with a legal defence is an option.

- That requires a very different guide from this one. Once I'm done writing this guide I might consider writing that one too.

- There's also a lot more empirical data for that type of guide, because there are more such cases.

- Case studies

- Almost every whistleblower who leaked US classified information in the past 30 years has been imprisoned

- Example: Reality Winner, Chelsea Manning

- Only one person leaked US classified information and escaped prison.

- Examples of people who were not imprisoned despite leaking US classified information are >30 years old.

- Example: Daniel Ellsberg, maybe Perry Fellwock

- Should privately support whistleblowers leaking classified information, but publicly not talk about leaking classified information - Disagree

- Many aspects of the plan depend critically on whether classified information is leaked or not, as most whistleblowers who don't leak classified information don't get imprisoned, and all whistleblowers who leak classified information get imprisoned.

- Having clear writing and thinking on this issue is extremely important. I can't muddy it by replacing "classified" with some euphemism for it.

- I would like to earn the trust of a potential whistleblower without deception.

- In case of a conflict of interest between doing what is right for the whistleblower, versus doing what is right for the journalist / lawyer / funder / etc, I will prioritise the whistleblower.

- Whistleblowers are the people most likely to go to prison, and this is a factor influencing why I prioritise earning their trust over everyone else's.

-

Writing such a guide is too hard. Any whistleblower who needs your guide is going to get themselves arrested anyway. - Disagree

- Since the plan involves leaving the US, I think it's possible for a potential whistleblower to make some opsec mistakes and still escape with their life.

- The guide explicitly states that indefinite anonymity is not possible, and that what is at stake is only the length of time before they get doxxed.

- The guide needs to transmit both the opsec mindset, and a specific set of opsec rules. If both are successfully transmitted, the whistleblower can then attempt to adapt any part of it to their unique circumstances.

- Transmitting an opsec mindset, not just a set of opsec rules, is hard to do in a short amount of time. I still think it is worth figuring out how to do this. A lot of employees at OpenAI/Anthropic/DeepMind/etc are software developers, and teaching them a security mindset may be easier.

- A high-quality guide for this set of circumstances does not exist on the internet as far as I know. There are a lot of opsec guides on the internet for everyone from software developers to non-classified whistleblowers to dark web drug vendors to hackers. Obtaining information from those guides and distilling it into a clear set of rules requires time and energy the whistleblower may not have. Most likely, they will benefit from a ready-to-go guide.

- The guide will have to be regularly updated with new information as the security landscape changes.

-

Hot war between US and China/Russia is very unlikely. US journalists and youtubers can be trusted to publish the documents, non-US journalists don't need to be involved. - Disagree

- I agree there is a significant probability no hot war happens. I think a hot war has at least a 10% chance, and is worth preparing for.

- I'm guessing our actual disagreement is on how likely superintelligent AI is to be built in the first place, or something similar.

- It is obvious to me why an intelligence community and AI lab that has succeeded at building aligned superintelligent AI will try to disable the military and nuclear command of the other country, for instance by cyberhacking, hyperpersuasion, bioweapons, nanotech weapons and so on.

- Even if superintelligent AI has not yet been built, if your country has significant chance of building it first, it makes game theoretic sense to pre-emptively escalate military conflict.

- If any country's govt actually gets convinced that superintelligent AI is likely to cause human extinction, they might pre-emptively escalate military conflict to get other govts to stop building it.

- US journalists will have specific pro-US govt biases in how they publish the piece.

- This could make it harder to convince the general public outside the US of the issue, even if they are aware of it.

-

Publishing original redacted documents is not necessary. Journalists writing a propaganda piece on the issue without publishing documents is fine - Disagree

- Counterexamples:

- Kelsey Piper at Vox publishes redacted documents.

- Snowden insisted on transferring documents to journalists and picking journalists who will report the truth honestly and choose what to disclose.

-

Supporting independent whistleblowers is useful, but supporting independent cyberhackers is not useful - Disagree

- Choosing not to support independent cyberhackers significantly reduces the amount of information that can be published.

- Case study

- The Guccifer 2.0 leak, which revealed private emails of Hillary Clinton about Bernie Sanders, was most likely obtained by cyberhacking, not from a whistleblower

- This had non-negligible influence on 2016 US election

-

Whistleblower providing clearcut evidence will not lead to an AI ban - Disagree

- Examples of specific evidence a whistleblower may uncover:

- Latest capabilities of AI models, including real examples of AI being used for persuasion (including hyperpersuasion), cyberhacking (including discovering novel zerodays in hardware or software), bioweapons R&D (including inventing novel bioweapons), designs for hypothetical future weapons, and so on.

- Private notes such as diaries and emails where leadership of AI company or leadership of government talks about their true values, including whose lives they prioritise above whose.

- Example: 2016 DNC email leak uncovered some of Hillary Clinton's true thoughts on her campaign backers or on Bernie Sanders. This led to non-zero influence on US election in 2016.

- Example: Assange's Collateral Murder video uncovered true thoughts of those involved in the shooting. This led to non-zero influence on anti-war protests in the US, but has not yet significantly changed US foreign policy.

- Since ideologies (including moral ideologies) associated with AI risk are more extreme, the uncovered information on true values of leaders could also be more extreme.

- Full causal chain behind a certain decision, including all the decision-makers involved and how certain stakeholders conspired to take control away from other stakeholders.

- Example: Snowden explains in detail how the US Supreme Court, members of Congress and Senate, judges on the FISA court, US inspector general, and internal reporting channels within NSA were all systematically used or hacked, in order to keep secret the extent of NSA surveillance. This has not yet led to major changes on this issue.

- There may be a similar causal chain behind important decisions taken using AI, where many nominal decision-makers in US democracy are routed around and power is centralised in the hands of a very small number of people.

- See also: AI governance papers posted on LessWrong on AI-enabled dictatorships and coups.

-

Instead of mass broadcasting whistleblower guide, consider passing a message to AI employees privately - Maybe

- Someone else can take this strategy while I follow my strategy. I think both are worth doing.

- Me starting my project could inspire someone else to collaborate or start similar projects.

-

Finding journalists in non-US-allied states who cover tech accurately and can adopt latest tech tools may be difficult - Agree

- Agree, but still think it is worth trying.

-

Legal expertise is currently missing on the team - Agree

- Will need to collaborate with someone with legal expertise

Moral

It is possible there is misalignment of moral values between me and the funder. This is discussed here.

-

Should not leak classified info, breaking US law is morally wrong - Disagree

- Moral disagreements are hard to resolve; I don't know of any one-paragraph answer that is likely to convince you. Maybe post your specific disagreement somewhere and I can have a look.

-

Supporting whistleblowers is morally correct but supporting independent cyberhackers is morally incorrect - Disagree

- Both will necessarily leak important information of people without their consent.

- Moral disagreements are hard to resolve; I don't know of any one-paragraph answer that is likely to convince you. Maybe post your specific disagreement somewhere and I can have a look.

-

Private lives of people at the companies might get leaked and this is bad. - Disagree

- I assume that the whistleblower or the journalist will often redact information that is not politically important, in either case. Private lives of people can still be redacted.

- It is possible that both the whistleblower and the journalist choose to not redact some information. I am okay with this happening in the worst case, and I understand why some people might not be okay with this.

- I think the downsides of a world with powerful organisations able to keep secrets are worse than the downsides of their private lives also being revealed to the public.

- Case studies

- Wikileaks has previously posted private life details such as evidence a politically influential person hired sex workers.

Legal

Project 1

[REDACTED]

All details not published for strategic reasons. Message me for more details.

Project 2

- Providing journalists and youtubers with opsec tools such as SecureDrop is legal in most countries including the US and India.

- Journalists and youtubers can face legal repercussions for publishing leaked documents. However the team that provides them opsec and training is not likely to face significant repercussions.

Relationship between me and funder

If you are an existing funder active in this space, there may be multiple conflicts of interest involved with funding me.

- Supporting me versus supporting alignment researchers and policymakers inside AI labs.

- Supporting whistleblowers versus supporting journalists.

- Supporting me versus reducing your legal exposure.

You can anonymously donate XMR to me if you would like to avoid entangling your reputation with mine.

Do not donate:

- if your expected net worth in the next 5 years is below $500k. There's a possibility you need this money more than me.

- if you feel you have obtained most of your money by morally grey or immoral means. This is true whether or not I ever get to know how you obtained your money.

I pre-commit to not accepting funding publicly from Dustin Moskovitz or Jaan Tallinn until they can personally have a 30-min private conversation with me around conflicts of interest.

[REDACTED]

All details not published for strategic reasons. Message me for more details.

Deprioritised

I'm not pursuing the projects below as of 2025-06.

These are other projects that also fit in the broad cluster of leaking, publishing and popularising, in one nuclear state, info that is difficult to publish and popularise in another nuclear state.

- Improving independent cyberhacking - contributing knowledge or funding, etc

- Legally grey data collection - doxxing social media accounts of powerful individuals, obtaining drone/cctv footage of them, etc

- Improvements to airgapped tech - improved pgp tool, improvements over Tor, dead drop coordination system, reviews of faraday cages, etc

- Open source search engine - open source web crawlers, open source LLM embedding models (without advancing state of the art), etc

- Bypassing geopolitical barriers on internet - improved browser tools for language translation, pay-to-get foreign SIM card, etc

- Starting a popular youtube channel myself

- Starting hard-to-censor social media platform or distributed social media platform
